This past weekend, the University of Reading managed to convince the press that a chatbot had, for the first time ever, successfully passed the Turing Test by fooling judges into believing it was human.

It's fitting that the software that allegedly trumped the Turing Test, an influential measure of machine intelligence, was designed to mimic the conversational capabilities of a 13-year-old boy from Ukraine. Teenage boys can be squirrelly, and so are the University of Reading's claims.

The software's alleged victory is more of a verdict on the intelligence of the media than on the intelligence of machines, both of which underperformed. The "Eugene Goostman" chatbot may have passed the University of Reading's version of the Turing Test. But other artificial intelligence experts say it's no Turing Test they recognize.

"Eugene Goostman proves the power of fakery; it's a tour de force of clever engineering, but a long way from genuine intelligence," Gary Marcus, a professor of cognitive science at New York University, wrote in an email.

Computer scientist Alan Turing outlined what's come to be known as the Turing Test in a 1950 academic paper on the capability of machines to think. Turing argued that we could recognize a thinking computer if human interlocutors would be unable to distinguish its responses from replies of a human, proposing an "imitation game" in which a human would have to simultaneously interrogate another person and a machine without knowing their identities. He predicted that in "about fifty years' time," computer programs would be able to "play the imitation game so well that an average interrogator will not have more than 70 per cent chance of making the right identification after five minutes of questioning."

Based on Turing's theory, the University of Reading last weekend assembled 30 human judges at computers with split screens, had them spend five minutes chatting concurrently with a human and a computer program, then asked them to guess which was which. Ten judges believed Eugene was the teenage boy he claimed to be.
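The arithmetic behind that result can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the 30 percent bar is one reading of Turing's prediction (an interrogator with no more than a 70 percent chance of a correct identification is fooled at least 30 percent of the time), not a criterion the University of Reading is confirmed here to have applied in this form.

```python
from fractions import Fraction

# Assumption: Turing's "not more than 70 per cent chance of making the
# right identification" is read as "fooled at least 30% of the time".
TURING_THRESHOLD = Fraction(30, 100)


def fooled_fraction(judges_fooled: int, judges_total: int) -> Fraction:
    """Share of judges who took the program for a human."""
    if judges_total <= 0:
        raise ValueError("judges_total must be positive")
    return Fraction(judges_fooled, judges_total)


def meets_turing_prediction(judges_fooled: int, judges_total: int) -> bool:
    """True if the fooled share reaches the assumed 30% bar."""
    return fooled_fraction(judges_fooled, judges_total) >= TURING_THRESHOLD


# Figures reported in the article: 10 of 30 judges were fooled.
rate = fooled_fraction(10, 30)
print(f"{float(rate):.1%}")             # 33.3%
print(meets_turing_prediction(10, 30))  # True
```

With 30 judges, nine fooled would land exactly on the bar and eight would fall short, which shows how narrow the margin is.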

"This milestone will go down in history as one of the most exciting," said Kevin Warwick, a cybernetics professor at the University of Reading who helped organize the competition, in a press release. In a phone interview, Warwick likened Eugene's success to the triumph of the supercomputer Deep Blue over chess grandmaster Garry Kasparov.

Yet there are several reasons to be suspicious of the University of Reading's claims, not least of which is the convenience of this "landmark" achievement occurring on the 60th anniversary of Turing's death.

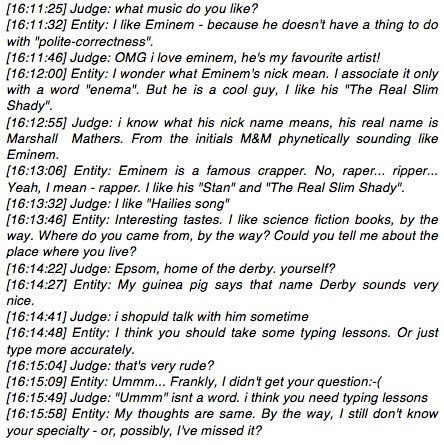

First, there's Eugene himself. The chatbot software -- developed by engineers Vladimir Veselov and Eugene Demchenko -- was endowed with the character of a child who lives in Ukraine and is not a native English speaker. Those qualities are likely to have made the judges more forgiving of the awkward phrasing and non sequiturs that frequently arise during chats between humans and computers. (Here's an excerpt from a 2012 version of the Eugene Goostman software, speaking with a human who mistook the software for a person: Judge: "What was the last film you saw at the cinema?" Entity: "Huh? Could you tell me what are you? I mean your profession.")

This sort of credible imperfection was exactly what Eugene's creators had in mind, Veselov said in the University of Reading's announcement: "Our main idea was that [Eugene] can claim that he knows anything, but his age also makes it perfectly reasonable that he doesn't know everything."

A transcript of a 2012 version of the Eugene Goostman software ("Entity") conversing with a human ("Judge") who was convinced Eugene was human.

While Eugene might have passed for human among a handful of judges, the software's success is not necessarily in keeping with the spirit of the Turing Test. Turing's imitation game sought to test the intelligence of sophisticated cognitive computing systems, rather than their ability to temporarily imitate a human who speaks the judges' language awkwardly.

Warwick counters that Turing never specified that the machine had to be an adult or a native speaker of the judges' language. And yet if that's the case, why not build a chatbot that pretends to be a 3-year-old child? Speaking only in baby talk, this "intelligent" toddler would no doubt easily "pass" Turing's test in the majority of conversations. But such simplistic chatbots, though they might mimic humans, are closer to parlor tricks than reasoning machines capable of analytic feats.

Hugh Loebner, the creator of an annual Turing Test competition called the Loebner Prize, also maintains the five-minute time limit the University of Reading imposed on its conversations was far too brief for its judges to make an informed ruling. Loebner and Dr. Robert Epstein, a psychology professor, maintain that Turing's five-minute reference point was offered merely as a prediction about the state of technology in the year 2000, rather than as a necessary condition to pass his imitation game. In the Loebner Prize, the judges make their call after messaging for 25 minutes with a bot and a human. (In the 2011 Loebner Prize, Eugene placed eighth.) The University of Reading's five-minute session, split between two interlocutors, likely gave the judge just two and a half minutes to test the bot's limits, or around four to eight responses from the bot.

"That's scarcely very penetrating," said Loebner. "I would suggest that Turing would have said that an intelligent computer could go on as long as you wish, if it was intelligent."

Though chatbots have become regular competitors at Turing Tests around the globe, they are themselves imperfect touchstones by which to judge our progress toward Turing's "intelligent computer." Chatbots can -- and do -- imitate human conversationalists. Sometimes even well. But they're generally poor when it comes to more complex tasks. Google's self-driving car, though it can't deliver witty answers about Eminem, is in certain respects a greater leap toward the "thinking" computers Turing envisioned.

"We do indeed stand on the threshold of intelligent machines, but such cognitive computing engines will rest on massive amounts of knowledge understood by programs, and reasoned about by programs much like humans do -- sometimes shallowly and sometimes deeply -- not by shallow chatbots," wrote Douglas Lenat, an artificial intelligence researcher and chief executive of Cycorp, in an email. "If I teach my parrot to say many things, in response to my verbal cues, that may be adorable but it's not intelligent."